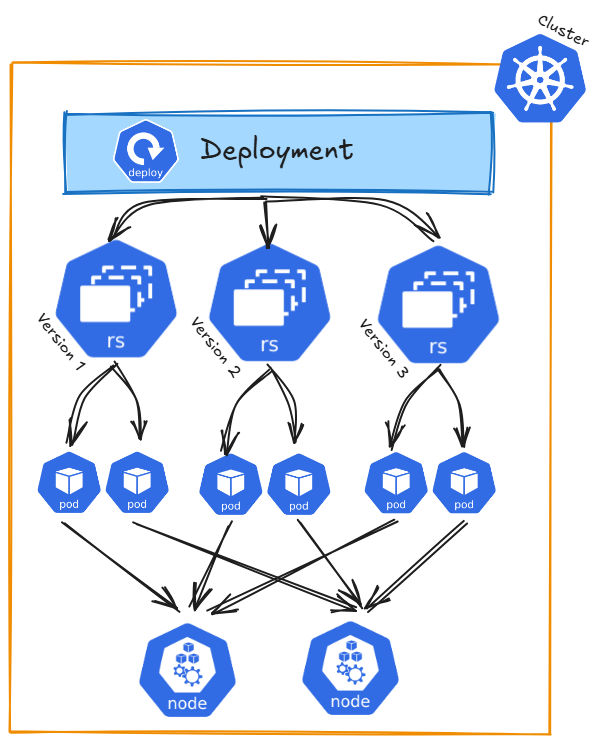

Deployment / ReplicaSet

Useful Links

- Kubernetes Official Documentation

- Using kubectl to Create a Deployment

- https://thenewstack.io/kubernetes-deployments-work/

Architecture

Detailed Description

In Kubernetes, Deployments are designed to manage stateless services in your cluster and to define the desired state of your application.

The Desired State describes how your application should look in Kubernetes. You tell Kubernetes:

- How many Pods should be running?

- Which container image should be used?

- What configurations (e.g., environment variables, ports) should be applied?

A Deployment ensures that the actual state in the cluster matches the desired state. For example, if a Pod crashes, Kubernetes automatically creates a new one to maintain the desired state.
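The three questions above map onto fields of a Deployment manifest. The following is a minimal sketch, not a definitive configuration: the file name `nginx-deployment.yaml`, the container name `hello`, and the environment variable `KEY=VALUE` are assumptions chosen for illustration, and the image is the `nginxdemos/hello` image used in the command reference further down.

```shell
# Write a minimal Deployment manifest (file name is an assumption for illustration).
cat > nginx-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 3                     # how many Pods should be running
  selector:
    matchLabels:
      app: nginx                  # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: hello             # illustrative container name
          image: nginxdemos/hello # which container image should be used
          env:                    # configuration: environment variables
            - name: KEY
              value: "VALUE"
          ports:                  # configuration: ports
            - containerPort: 80
EOF

# Show what was written.
cat nginx-deployment.yaml
```

Against a running cluster, the manifest could then be applied with kubectl apply -f nginx-deployment.yaml.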

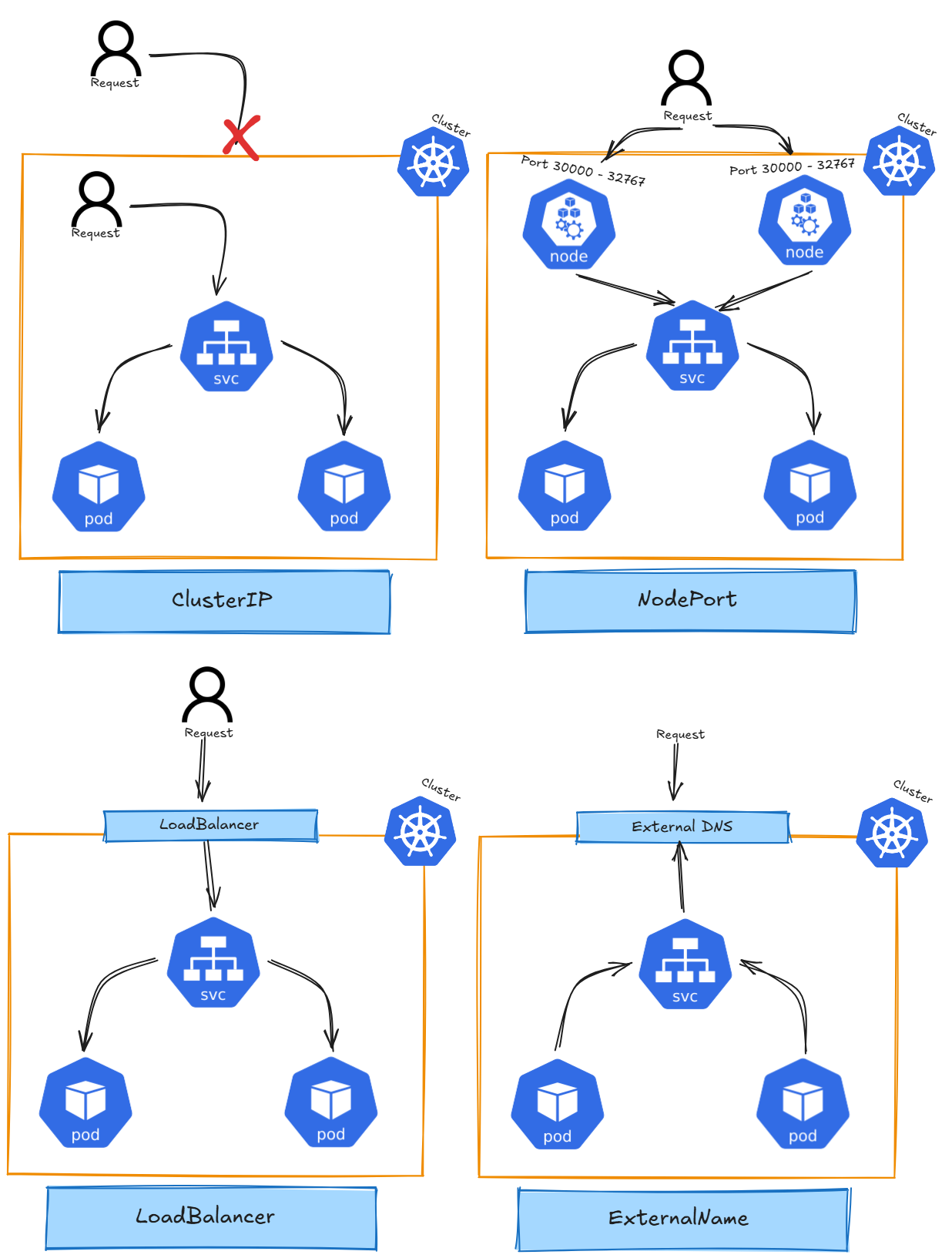

Key Features

-

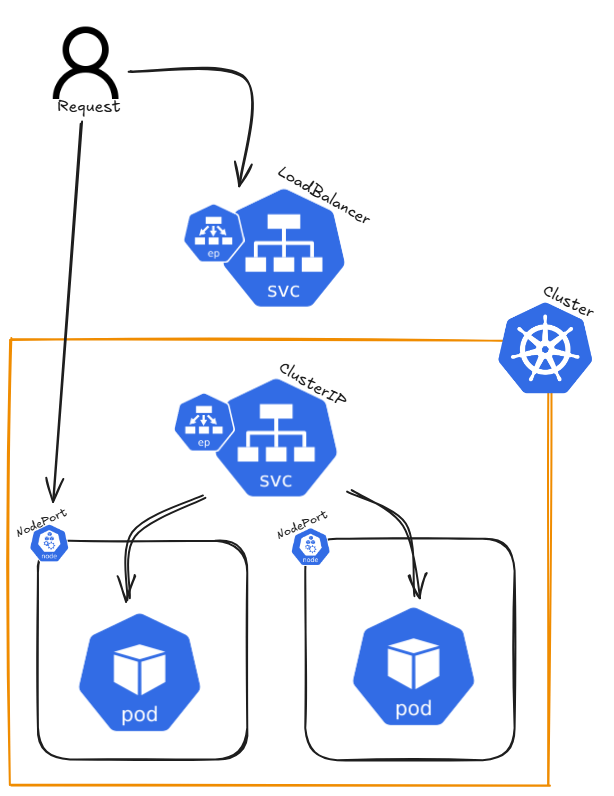

ClusterIP:Rollbacks:TheRevertdefaulttoserviceatypepreviousthatversionassignsduring aninternalupdateIP,issuereachableusingonlykubectlwithinrolloutthe cluster. It allows communication between Pods inside the cluster.Kube-Proxyload balances traffic across pods behind a ClusterIP Service.undo. -

NodePort:Rolling Updates:ExposesSmooththetransitionsservicebetweenonversions,asupportedstaticby:port-

allmaxSurge:nodesTemporary increase inthePodscluster,duringallowingupdatesexternal(e.g.,traffic25%). -

accessmaxUnavailable:theAllowableservice.percentage of Pods down during updates (e.g., 25%).

acrossto -

-

LoadBalancer:Scaling:InAdjustcloudreplicaenvironments,countsthiswithservicekubectltype provisions an external load balancer to distribute traffic to multiple Pods.scale. -

ExternalName:Monitoring Updates:MapsUseakubectlservicerollout status toantrackexternalrolloutDNS name, allowing Kubernetes services to refer to external resources.progress. -
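To make the two rolling-update percentages concrete: Kubernetes converts a percentage maxSurge into an absolute Pod count by rounding up, and a percentage maxUnavailable by rounding down. The sketch below reproduces that arithmetic. The helper names `max_surge` and `max_unavailable` and the replica count of 10 are assumptions for illustration only.

```shell
# Percentage -> absolute Pod count, as applied during a rolling update.
# max_surge rounds up; max_unavailable rounds down.
max_surge() {        # usage: max_surge REPLICAS PERCENT
  echo $(( ($1 * $2 + 99) / 100 ))
}
max_unavailable() {  # usage: max_unavailable REPLICAS PERCENT
  echo $(( $1 * $2 / 100 ))
}

replicas=10          # assumed replica count for this example
surge=$(max_surge "$replicas" 25)
unavailable=$(max_unavailable "$replicas" 25)

echo "at most $(( replicas + surge )) Pods exist during the update"
echo "at least $(( replicas - unavailable )) Pods stay available"
```

With 10 replicas and 25% for both settings, the update may briefly run 13 Pods and never drops below 8 available Pods.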

Deployments manage ReplicaSets, primarily due to historical reasons. There is no practical need to manually create ReplicaSets anymore, as Deployments provide a more user-friendly and feature-rich abstraction for managing application lifecycle, including replication, updates, and rollbacks.
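The lifecycle operations named above (update, status, rollback, scaling) can be sketched as one command sequence. This assumes a Deployment called nginx created with kubectl create deployment, which labels its Pods app=nginx. The image tag `nginxdemos/hello:plain-text` is only an example target version, and the `k` wrapper with its `RUN=1` switch is a hypothetical convenience for this sketch: by default it prints each command so it can be reviewed before touching a cluster.

```shell
# Wrapper that prints each kubectl command instead of running it,
# unless RUN=1 is set in the environment (a convention assumed for this sketch).
k() {
  if [ "${RUN:-0}" = "1" ]; then
    kubectl "$@"
  else
    echo "kubectl $*"
  fi
}

# Start a rolling update by changing the image of every container in the Deployment.
k set image deployment/nginx '*=nginxdemos/hello:plain-text'
# Follow the rollout until it completes or fails.
k rollout status deployment/nginx
# List the ReplicaSets the Deployment manages, selected by the app=nginx label.
k get replicasets -l app=nginx
# Revert to the previous revision if the new version misbehaves.
k rollout undo deployment/nginx
# Change the replica count.
k scale deployment/nginx --replicas=5
```

After an update, the ReplicaSet listing typically shows the old ReplicaSet scaled down to 0 and a new one holding the current Pods, which is what makes kubectl rollout undo possible.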

Command Reference Guide

Cluster IP

# Create nginx deployment with three replicas

kubectl create deployment nginx --image=nginxdemos/hello --port=80 --replicas=3

# Expose application as ClusterIP with port 8080 (ClusterIP is the default if not defined)

kubectl expose deployment nginx --type=ClusterIP --port=8080 --target-port=80

# --port=8080: The port exposed by the service (used internally to access the deployment)

# --target-port=80: The port on the pods where the application is running

# Get services

kubectl get service nginx -o wide

# Get full resource description using describe

kubectl describe service/nginx

# Get created endpoints

kubectl get endpoints

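# curl the default service DNS entry on the service port 8080
# (the name resolves only from inside the cluster, e.g. from within a Pod)

# Each curl request may return a different hostname because kube-proxy load-balances across the Pods

curl nginx.default.svc.cluster.local:8080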

# Delete service

kubectl delete service/nginx

NodePort:

# Create nginx deployment with three replicas

kubectl create deployment nginx --image=nginxdemos/hello --port=80 --replicas=3

# Expose application as NodePort

kubectl expose deployment/nginx --type=NodePort

# Get services

kubectl get service nginx -o wide

# PORT(S) column: first port = service port; second port = NodePort

# Get full resource description using describe

kubectl describe service/nginx

# Delete service

kubectl delete service/nginx

LoadBalancer

# Create nginx deployment with three replicas

kubectl create deployment nginx --image=nginxdemos/hello --port=80 --replicas=3

# Expose application as LoadBalancer

kubectl expose deployment/nginx --type=LoadBalancer --port 8080 --target-port 80

# Get services

kubectl get service nginx -o wide

# PORT(S) column: first port = service port; second port = NodePort

# Get full resource description using describe

kubectl describe service/nginx

# Delete service

kubectl delete service/nginx

ExternalName

# Query running pods

kubectl get pods

# Query detailed information about pods

kubectl get pods -o wide

# Create single pod

kubectl run nginx --image=nginx

# Run image / pass environment and command

kubectl run --image=ubuntu ubuntu --env="KEY=VALUE" -- sleep infinity

# Get yaml configuration for the resource

kubectl run nginx --image=nginx --dry-run=client -o yaml | tee nginx.yaml

# Get specific information of any yaml section

kubectl explain pod.spec.restartPolicy

# Create pod resource from yaml configuration file

kubectl create -f nginx.yaml

# Apply pod resource from yaml configuration

kubectl apply -f nginx.yaml

# Delete pod resource without waiting for graceful shutdown of application (--now)

kubectl delete pod/nginx pod/ubuntu --now

# Get full resource description using describe

kubectl describe pod/nginx

# Get logs for a specific container in the pod

kubectl logs pod/nginx -c nginx

# If a pod fails, use -p to get the previous logs for a specific container in the pod

kubectl logs pod/nginx -c nginx -p

# Combine pod creation

kubectl run nginx --image=nginx --dry-run=client -o yaml | tee nginx.yaml

kubectl run ubuntu --image=ubuntu --dry-run=client -o yaml | tee ubuntu.yaml

{ cat nginx.yaml; echo "---"; cat ubuntu.yaml; } | tee multi_pods.yaml

kubectl apply -f multi_pods.yaml

Headless Service

# Query running pods

kubectl get pods

# Query detailed information about pods

kubectl get pods -o wide

# Create single pod

kubectl run nginx --image=nginx

# Run image / pass environment and command

kubectl run --image=ubuntu ubuntu --env="KEY=VALUE" -- sleep infinity

# Get yaml configuration for the resource

kubectl run nginx --image=nginx --dry-run=client -o yaml | tee nginx.yaml

# Get specific information of any yaml section

kubectl explain pod.spec.restartPolicy

# Create pod resource from yaml configuration file

kubectl create -f nginx.yaml

# Apply pod resource from yaml configuration

kubectl apply -f nginx.yaml

# Delete pod resource without waiting for graceful shutdown of application (--now)

kubectl delete pod/nginx pod/ubuntu --now

# Get full resource description using describe

kubectl describe pod/nginx

# Get logs for a specific container in the pod

kubectl logs pod/nginx -c nginx

# If a pod fails, use -p to get the previous logs for a specific container in the pod

kubectl logs pod/nginx -c nginx -p

# Combine pod creation

kubectl run nginx --image=nginx --dry-run=client -o yaml | tee nginx.yaml

kubectl run ubuntu --image=ubuntu --dry-run=client -o yaml | tee ubuntu.yaml

{ cat nginx.yaml; echo "---"; cat ubuntu.yaml; } | tee multi_pods.yaml

kubectl apply -f multi_pods.yaml

Hints

When accessing an external IP (e.g., Node1's external IP), the hostname and IP displayed on the website may not change. To test Kubernetes' load-balancing behavior, cordon Node1 and delete the pod running on it. When you call Node1's IP again, kube-proxy will reroute the traffic to a healthy pod on another node.
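The test described in this hint can be sketched as the following sequence. The node name `node1` is a placeholder (take the real name from kubectl get nodes), the app=nginx label assumes the Deployment was created with kubectl create deployment nginx, and the `k` wrapper with its `RUN=1` switch is a hypothetical helper that prints commands instead of executing them by default.

```shell
# Wrapper that prints each kubectl command instead of running it,
# unless RUN=1 is set in the environment (a convention assumed for this sketch).
k() {
  if [ "${RUN:-0}" = "1" ]; then
    kubectl "$@"
  else
    echo "kubectl $*"
  fi
}

NODE=node1   # placeholder node name

# Find the nginx Pods currently scheduled on the node.
k get pods -l app=nginx -o wide --field-selector "spec.nodeName=$NODE"
# Mark the node unschedulable so replacement Pods land elsewhere.
k cordon "$NODE"
# Delete the nginx Pods on that node; the Deployment recreates them on other nodes.
k delete pods -l app=nginx --field-selector "spec.nodeName=$NODE"
# Confirm where the Pods are running now.
k get pods -l app=nginx -o wide
# Make the node schedulable again once the test is done.
k uncordon "$NODE"
```

Calling the node's external IP between the delete and the uncordon step should then show a hostname belonging to a Pod on a different node.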

Open questions

Why is the service DNS name not reachable after creating the service? Example: curl nginx.default.svc.cluster.local

My personal summary