Deployment / ReplicaSet

Useful Links

Architecture

Detailed Description

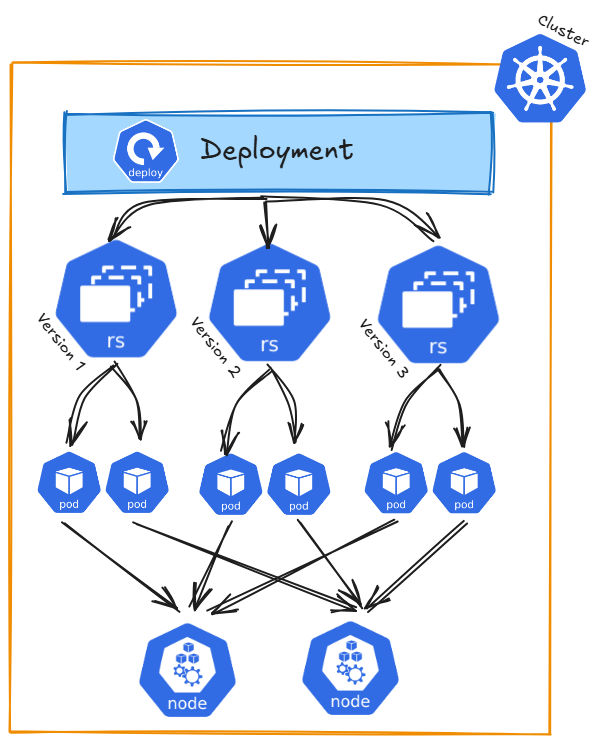

Kubernetes Deployments manage stateless services in your cluster by letting you define the desired state of your application. The desired state describes how your application should look in Kubernetes. You tell Kubernetes:

- How many Pods should be running?

- Which container image should be used?

- What configurations (e.g., environment variables, ports) should be applied?

A Deployment ensures that the actual state in the cluster matches the desired state. For example, if a Pod crashes, Kubernetes automatically creates a new one to maintain the desired state.
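The three questions above map onto fields of a Deployment manifest. The following is a minimal sketch, assuming the nginxdemos/hello image used later in these notes; the container name, the app=nginx label, and the KEY/VALUE environment variable are illustrative. It only writes the file; the apply step is left as a comment because it needs a running cluster.

```shell
# Write a minimal Deployment manifest that describes the desired state.
cat > nginx-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 3                     # how many Pods should be running
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx                # must match spec.selector.matchLabels
    spec:
      containers:
      - name: hello
        image: nginxdemos/hello   # which container image should be used
        ports:
        - containerPort: 80       # port the container listens on
        env:
        - name: KEY               # illustrative environment variable
          value: "VALUE"
EOF

# Apply it against a cluster (requires kubectl and cluster access):
# kubectl apply -f nginx-deployment.yaml
```

If a Pod created from this manifest is deleted or crashes, the Deployment's controller should bring the count back to three.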

Key Features

- Rollbacks: Revert to a previous version when an update goes wrong, using kubectl rollout undo.

- Rolling Updates: Smooth transitions between versions, controlled by:

  - maxSurge: How many extra Pods may be created temporarily during an update (e.g., 25%).

  - maxUnavailable: How many Pods may be unavailable during an update (e.g., 25%).

- Scaling: Adjust replica counts with kubectl scale.

- Monitoring Updates: Use kubectl rollout status to track rollout progress.
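To make the maxSurge and maxUnavailable percentages concrete, here is a small worked calculation. It assumes a hypothetical Deployment with 10 replicas and both values set to 25%; Kubernetes rounds a maxSurge percentage up and a maxUnavailable percentage down to whole Pods.

```shell
replicas=10               # hypothetical replica count
max_surge_pct=25
max_unavailable_pct=25

# maxSurge: percentage of replicas, rounded up to a whole Pod.
max_surge=$(( (replicas * max_surge_pct + 99) / 100 ))
# maxUnavailable: percentage of replicas, rounded down.
max_unavailable=$(( replicas * max_unavailable_pct / 100 ))

echo "maxSurge=$max_surge maxUnavailable=$max_unavailable"
echo "at most $(( replicas + max_surge )) Pods exist during the update"
echo "at least $(( replicas - max_unavailable )) Pods stay available"
```

With these numbers the rollout may run up to 13 Pods at once and should keep at least 8 available.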

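Deployments manage ReplicaSets under the hood: each rollout creates a new ReplicaSet, and older ones are kept so that rollbacks remain possible. ReplicaSets exist as a separate resource largely for historical reasons. There is no practical need to create ReplicaSets manually anymore, as Deployments provide a more user-friendly and feature-rich abstraction for managing the application lifecycle, including replication, updates, and rollbacks.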

Command Reference Guide

Remember to check the configuration each command generates first by appending a client-side dry run piped through tee:

--dry-run=client -o yaml | tee nginx-deployment.yaml

# Create nginx deployment with the default of one replica

kubectl create deployment nginx --image=nginxdemos/hello --port=80

# Create nginx deployment with three replicas

kubectl create deployment nginx --image=nginxdemos/hello --port=80 --replicas=3

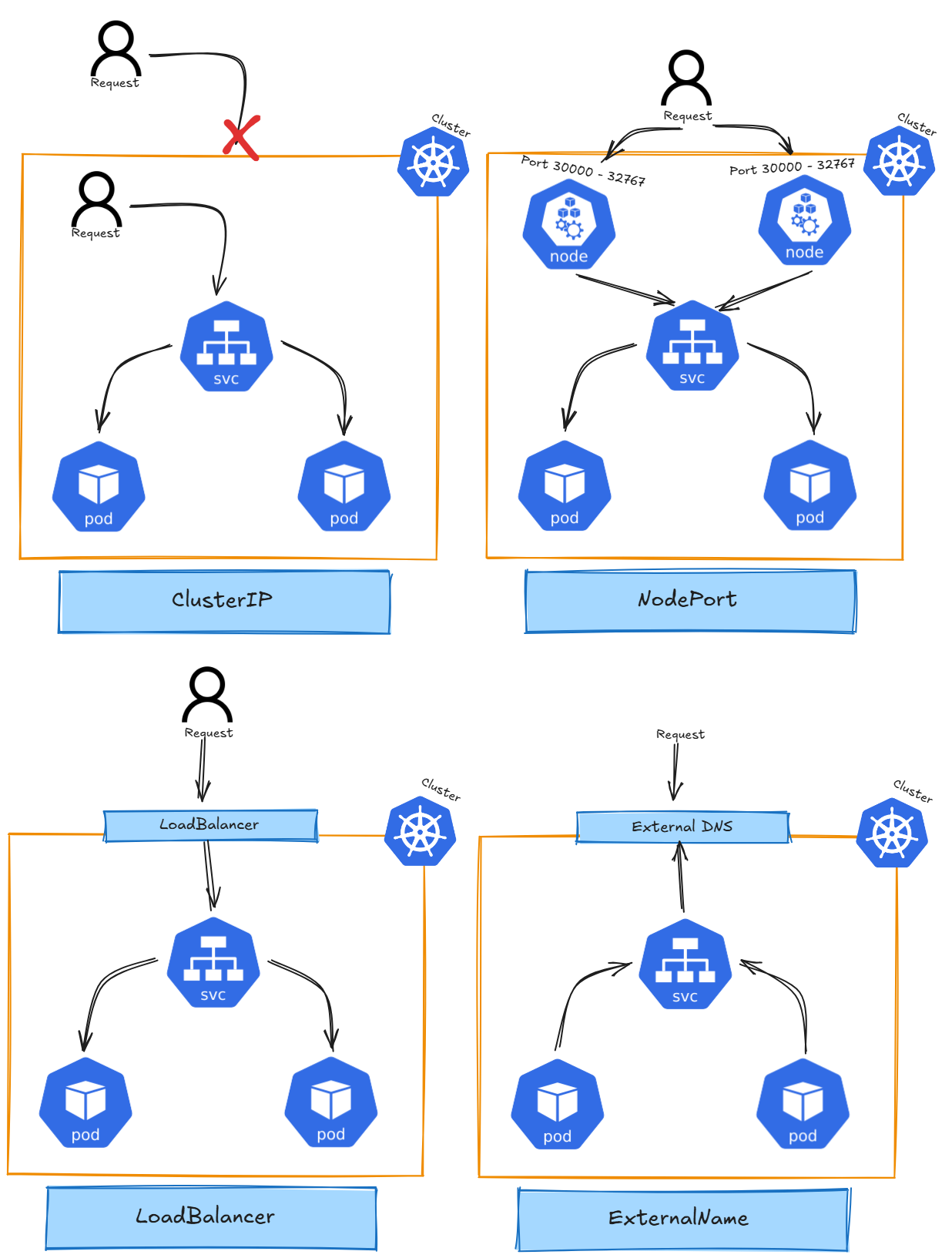

# Expose application as ClusterIP with port 8080 (ClusterIP is the default if not defined)

kubectl expose deployment nginx --type=ClusterIP --port=8080 --target-port=80

# --port=8080: The port exposed by the service (used internally to access the deployment)

# --target-port=80: The port on the pods where the application is running
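The difference between --port and --target-port may be easier to see in the manifest that kubectl expose would generate. This is a minimal sketch of an equivalent Service, assuming the app=nginx label that kubectl create deployment nginx sets on its Pods; the file name is illustrative.

```shell
# Write a ClusterIP Service manifest equivalent to the expose command above.
cat > nginx-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: ClusterIP
  selector:
    app: nginx          # selects the Pods of the nginx deployment
  ports:
  - port: 8080          # --port: where the Service listens
    targetPort: 80      # --target-port: where the container listens
EOF

# Apply it against a cluster (requires kubectl and cluster access):
# kubectl apply -f nginx-service.yaml
```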

# Get services

kubectl get service nginx -o wide

# Get full resource description using describe

kubectl describe service/nginx

# Get created endpoints

kubectl get endpoints

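# curl the service via its default DNS entry (typically only resolvable from a pod inside the cluster; the service listens on port 8080)

# Successive curl requests may return different hostnames because kube-proxy load-balances across the pods

curl nginx.default.svc.cluster.local:8080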
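The service DNS name follows a fixed pattern, so it can be assembled from its parts. The sketch below builds the URL for the service created above, assuming the usual default cluster domain cluster.local. The commented command shows one way to try it from a throwaway pod; the pod name curl-test and the curlimages/curl image are assumptions, not part of these notes.

```shell
service=nginx
namespace=default
port=8080   # the Service port chosen with --port

# Service DNS names follow <service>.<namespace>.svc.<cluster-domain>.
url="http://${service}.${namespace}.svc.cluster.local:${port}"
echo "$url"

# Run curl from a temporary pod inside the cluster (requires cluster access):
# kubectl run curl-test --image=curlimages/curl --rm -it --restart=Never -- curl -s "$url"
```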

# Delete service

kubectl delete service/nginx

NodePort

# Create nginx deployment with three replicas

kubectl create deployment nginx --image=nginxdemos/hello --port=80 --replicas=3

# Expose application as NodePort

kubectl expose deployment/nginx --type=NodePort

# Get services

kubectl get service nginx -o wide

# In the PORT(S) column: first port = service port (here the application port 80); second port = NodePort
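As an illustration of how to read the PORT(S) column, the sketch below splits a sample value with plain shell parameter expansion. The value 80:31234/TCP is hypothetical; the actual NodePort is assigned by the cluster.

```shell
# Hypothetical PORT(S) value as printed by kubectl get service for a NodePort service.
ports="80:31234/TCP"

mapping=${ports%%/*}          # strip the protocol suffix
service_port=${mapping%%:*}   # part before the colon
node_port=${mapping##*:}      # part after the colon

echo "service port: $service_port, node port: $node_port"

# With cluster access, the NodePort can be read directly instead:
# kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'
```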

# Get full resource description using describe

kubectl describe service/nginx

# Delete service

kubectl delete service/nginx

LoadBalancer

# Create nginx deployment with three replicas

kubectl create deployment nginx --image=nginxdemos/hello --port=80 --replicas=3

# Expose application as LoadBalancer

kubectl expose deployment/nginx --type=LoadBalancer --port 8080 --target-port 80

# Get services

kubectl get service nginx -o wide

# In the PORT(S) column: first port = service port (8080); second port = NodePort

# Get full resource description using describe

kubectl describe service/nginx

# Delete service

kubectl delete service/nginx

ExternalName
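For reference, an ExternalName service maps a service name to an external DNS name instead of selecting pods. The following is a minimal sketch; the service name external-api and the target api.example.com are hypothetical placeholders.

```shell
# Write an ExternalName Service manifest; it has no selector and no pods behind it.
cat > external-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: external-api              # hypothetical service name
spec:
  type: ExternalName
  externalName: api.example.com   # hypothetical external DNS name
EOF

# Imperative equivalent (requires kubectl and cluster access):
# kubectl create service externalname external-api --external-name api.example.com
```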

# Query running pods

kubectl get pods

# Query detailed information about pods

kubectl get pods -o wide

# Create single pod

kubectl run nginx --image=nginx

# Run image / pass environment and command

kubectl run --image=ubuntu ubuntu --env="KEY=VALUE" -- sleep infinity

# Get yaml configuration for the resource

kubectl run nginx --image=nginx --dry-run=client -o yaml | tee nginx.yaml

# Get specific information of any yaml section

kubectl explain pod.spec.restartPolicy

# Create pod resource from yaml configuration file

kubectl create -f nginx.yaml

# Apply pod resource from yaml configuration

kubectl apply -f nginx.yaml

# Delete pod resource without waiting for graceful shutdown of application (--now)

kubectl delete pod/nginx pod/ubuntu --now

# Get full resource description using describe

kubectl describe pod/nginx

# Get logs for a specific container in the pod

kubectl logs pod/nginx -c nginx

# If a pod fails, use -p to get the previous logs for a specific container in the pod

kubectl logs pod/nginx -c nginx -p

# Combine pod creation

kubectl run nginx --image=nginx --dry-run=client -o yaml | tee nginx.yaml

kubectl run ubuntu --image=ubuntu --dry-run=client -o yaml | tee ubuntu.yaml

{ cat nginx.yaml; echo "---"; cat ubuntu.yaml; } | tee multi_pods.yaml

kubectl apply -f multi_pods.yaml

Headless Service
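A headless service is a Service with clusterIP set to None, so a DNS lookup returns the pod IPs directly instead of a single cluster IP. The following is a minimal sketch for the nginx deployment from these notes; the service name nginx-headless is a hypothetical placeholder.

```shell
# Write a headless Service manifest for the Pods labelled app=nginx.
cat > nginx-headless.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx-headless    # hypothetical service name
spec:
  clusterIP: None         # this is what makes the Service headless
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
EOF

# Apply it against a cluster (requires kubectl and cluster access):
# kubectl apply -f nginx-headless.yaml
```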

Hints

When accessing an external IP (e.g., Node1's external IP), the hostname and IP displayed on the website may not change. To test Kubernetes' load-balancing behavior, cordon Node1 and delete the pod running on it. When you call Node1's IP again, kube-proxy will reroute the traffic to a healthy pod on another node.
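The cordon test described above could be scripted roughly as follows. This is a sketch under assumptions: the node name node1 and the pod name are hypothetical placeholders, and the final uncordon step is an added cleanup that the hint itself does not mention. By default the script only prints the commands; set DRY_RUN=0 to run them against a real cluster.

```shell
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

node="node1"              # hypothetical node name
pod="nginx-abc123-xyz"    # hypothetical pod running on that node

run kubectl cordon "$node"        # mark the node unschedulable
run kubectl get pods -o wide      # find which pod runs on the node
run kubectl delete pod "$pod"     # the Deployment recreates it on another node
run kubectl uncordon "$node"      # allow scheduling on the node again
```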

Open questions

Why is the service DNS name not reachable after creating the service? (curl nginx.default.svc.cluster.local)

My personal summary